Stop Calling Them Pilots: The CXO’s AI Manifesto

Part 1 of a Five-Part Series in Preparation for the Biarritz Executive Retreat

If you're outsourcing strategic thinking to consultants who've never built anything in your business, you're already running a race you can’t win.

⚡ The Fundamental Problem

After analyzing dozens of AI transformations—from insurance firms achieving 18% efficiency gains to Fortune 500 companies stuck in expensive pilot purgatory—one pattern is unmistakable: Organizations where CXOs personally own AI transformation succeed. Those that delegate to consultants fail.

The failure autopsies reveal a consistent picture: talented technical teams, adequate budgets, and executive enthusiasm at launch, followed by near-zero measurable business impact six months later. The problem wasn't the technology. It was the absence of leadership.

You didn't reach the C-suite by waiting for playbooks. You got there by understanding your business better than anyone else. Yet the moment "AI transformation" hits the agenda, brilliant CXOs suddenly become spectators, hiring consultants who've never run anything in their industry, waiting for best practices from vendors, deferring to technical teams who understand algorithms but not business value.

🎯 Why 2026 Separates Leaders from Delegators

Collaborative intelligence compounds exponentially. Every quarter spent in pilot purgatory doesn't just put you one quarter behind; it widens a compounding gap that pushes you toward irrelevance. Organizations building institutional fluency around human-AI collaboration aren't just incrementally better. They're developing advantages competitors can't copy by purchasing the same models.

CXOs preparing for 2026 aren't asking "What should we do with AI?" They're asking:

• How do I architect human and machine intelligence so that it permeates my organization?

• What decisions can we delegate intelligently to autonomous systems?

• How do I build corporate learning that accelerates with each AI interaction?

• Where do I need human intelligence and judgment more, not less?

These are questions consultants can't answer credibly. That's your place at the table.

💡 Five Execution Imperatives

Based on transformations across financial services, healthcare, manufacturing, and technology, here's what separates leaders who own their AI future from those who outsource it:

🎯 Define North Star Metrics Before Evaluating Technology

Let's call it what it is: your "pilot program" is expensive experimentation designed to avoid making real decisions. You're not testing feasibility; you're postponing commitment.

Every AI project must demonstrate a clear line of sight to strategic business objectives. This means identifying your North Star Metric before evaluating any technology.

The litmus test: Can this AI initiative move a core business KPI within 90-180 days? If the answer isn't a clear "yes," you don't have a pilot—you have theater.

📊 Real-World Reality Check: A mid-sized insurance company initially launched an AI tool for fraud detection with no clear business owner and no operational metrics. Result? Confusion and zero impact. Consultants blamed "organizational readiness." The real problem? No one owned the business outcome.

In their second attempt, they made "claims resolution time" their singular North Star metric: tangible, customer-facing, benchmarked. Within four months, average resolution time fell by 18%. What changed? Leadership ownership, not technology.

📊 Make AI Non-Negotiable in Performance Systems

Culture change doesn't come from offsites and manifestos. It comes from structural decisions about money, incentives, and consequences.

The hard questions:

• Is AI orchestration in your hiring criteria?

• Are your OKRs explicitly measuring AI adoption?

• Do promotion decisions reflect who's building collaborative intelligence?

• Is your corporate education budget serious about upskilling?

• Are you recognizing AI adoption in performance reviews?

If AI capability isn't in your performance review template, you have consultant-approved theater, not culture.

Use the Impact-Feasibility Matrix: prioritize use cases that are both valuable (linked to strategic outcomes) and achievable (clear data availability, executive sponsorship, manageable scope).

If a use case can't demonstrate progress within six months, it belongs in your "future opportunities" folder. Culture isn't aspiration—it's architecture.
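The Impact-Feasibility Matrix and the six-month rule above can be written down as a simple screening rule. This is a minimal, hypothetical sketch. The 1-5 scoring scale, the threshold of 3, the `months_to_progress` estimate, the ranking by impact × feasibility, and the sample use cases are all illustrative assumptions, not a published method.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    impact: int              # assumed 1-5 score: link to a strategic outcome
    feasibility: int         # assumed 1-5 score: data, sponsorship, manageable scope
    months_to_progress: int  # estimated months until measurable progress


def triage(cases, threshold=3, horizon_months=6):
    """Split use cases into a prioritized list and a 'future opportunities' folder.

    A case is prioritized only if it scores at or above `threshold` on BOTH
    impact and feasibility AND can show progress within `horizon_months`.
    """
    prioritized, future = [], []
    for uc in cases:
        in_quadrant = uc.impact >= threshold and uc.feasibility >= threshold
        if in_quadrant and uc.months_to_progress <= horizon_months:
            prioritized.append(uc)
        else:
            future.append(uc)
    # Rank prioritized cases by impact x feasibility, highest first.
    prioritized.sort(key=lambda uc: uc.impact * uc.feasibility, reverse=True)
    return prioritized, future


if __name__ == "__main__":
    # Hypothetical portfolio, for illustration only.
    portfolio = [
        UseCase("claims triage", impact=5, feasibility=4, months_to_progress=4),
        UseCase("churn prediction", impact=4, feasibility=3, months_to_progress=5),
        UseCase("autonomous underwriting", impact=5, feasibility=2, months_to_progress=12),
        UseCase("internal FAQ bot", impact=2, feasibility=5, months_to_progress=2),
    ]
    now, later = triage(portfolio)
    print("Prioritize:", [uc.name for uc in now])
    print("Future opportunities:", [uc.name for uc in later])
```

The scores matter less than the discipline the rule enforces. A use case has to clear both axes and the time horizon, and anything that doesn't goes into the "future opportunities" folder rather than into a stalled pilot.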

🤝 Build Cross-Functional Co-Ownership

Organizations stumble when they treat AI as either a data science function or a business initiative. In reality, AI requires co-ownership.

The winning model creates cross-functional pods with dual leadership: one leader from the business domain owning outcomes, one from the technical side owning implementation. These aren't handoff relationships. They're co-pilot partnerships where leaders co-design success criteria, co-own metrics, and review progress together weekly.

Critical practice: Business and tech leaders must sit in the same room weekly reviewing both technical progress and business impact. No handoffs. No silos. Shared accountability.

Consultants can't build this structure. This is where you reclaim your seat at the table.

📈 Measure Business Impact, Not Model Accuracy

Model accuracy is a technical milestone. But businesses don't run on precision scores; they run on customer satisfaction, revenue growth, cost reduction, and operational efficiency.

Track two dimensions simultaneously:

Technical Performance: Model precision, recall, latency, data quality, coverage, system reliability.

Business Outcomes: Time saved per workflow, revenue increase per customer segment, error reduction in critical processes, customer satisfaction improvements.

What value looks like:

· "First-call resolution improved by 12%"

· "Monthly customer service costs dropped 8%"

· "Sales reps gained 2 hours per week for strategic activities"

· "Claims processing time reduced by 18%"

Build these insights into executive-level dashboards reviewed in your leadership cadence. When the COO reviews AI progress weekly, AI transforms from a departmental experiment into a company-wide priority.

Your dashboards should answer: "Did this move the needle on what matters?" If your data science team can't answer in one sentence, you have an experiment, not an AI initiative.
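To make "track two dimensions simultaneously" concrete, here is a minimal sketch that puts two technical metrics (precision and recall) next to one business outcome (the relative change in a KPI against its baseline) and reports them in a single sentence. The function names, confusion-matrix counts, and before/after figures are hypothetical. Precision and recall follow their standard definitions, and the relative change is (current − baseline) / baseline.

```python
def precision_recall(tp: int, fp: int, fn: int) -> tuple[float, float]:
    """Standard definitions: precision = TP/(TP+FP), recall = TP/(TP+FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def relative_change(baseline: float, current: float) -> float:
    """Percentage change versus baseline; negative means the KPI went down."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline * 100


def one_line_summary(kpi: str, baseline: float, current: float,
                     tp: int, fp: int, fn: int) -> str:
    """Lead with the business outcome; report model health alongside it."""
    change = relative_change(baseline, current)
    precision, recall = precision_recall(tp, fp, fn)
    direction = "down" if change < 0 else "up"
    return (f"{kpi} is {direction} {abs(change):.0f}% vs. baseline "
            f"(model precision {precision:.2f}, recall {recall:.2f}).")


if __name__ == "__main__":
    # Hypothetical figures: average claims resolution time in days, before and after.
    print(one_line_summary("Average claims resolution time", baseline=10.0, current=8.2,
                           tp=180, fp=20, fn=45))
```

The summary leads with the business KPI and puts the model metrics in parentheses. That ordering reflects the point of this section: precision and recall explain *why* the needle moved, but they are not the needle.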

🛡️ Build Governance as a Competitive Moat

Competitors can copy your technology stack in six months, hire similar talent, and buy the same tools. What they can't copy is organizational trust in your judgment about AI deployment.

AI can't scale without trust. Trust comes from knowing your systems are explainable, compliant, and bias-aware. Your most promising AI initiative could become your biggest liability if governance is an afterthought.

Governance checklist:

• Can we explain how this model makes decisions?

• Have we identified and tested for known biases?

• Do we have audit trails and compliance reviews?

• Are we prepared for regulatory scrutiny (EU AI Act, sector-specific regulations)?

• Have we assembled a governance board including IT, legal, and business representatives?

The competitive advantage: trust isn't a checkbox; it's a capability. Organizations that build it early move faster and scale more confidently. Organizations winning in 2026 win because customers, employees, regulators, and boards trust their governance decisions.

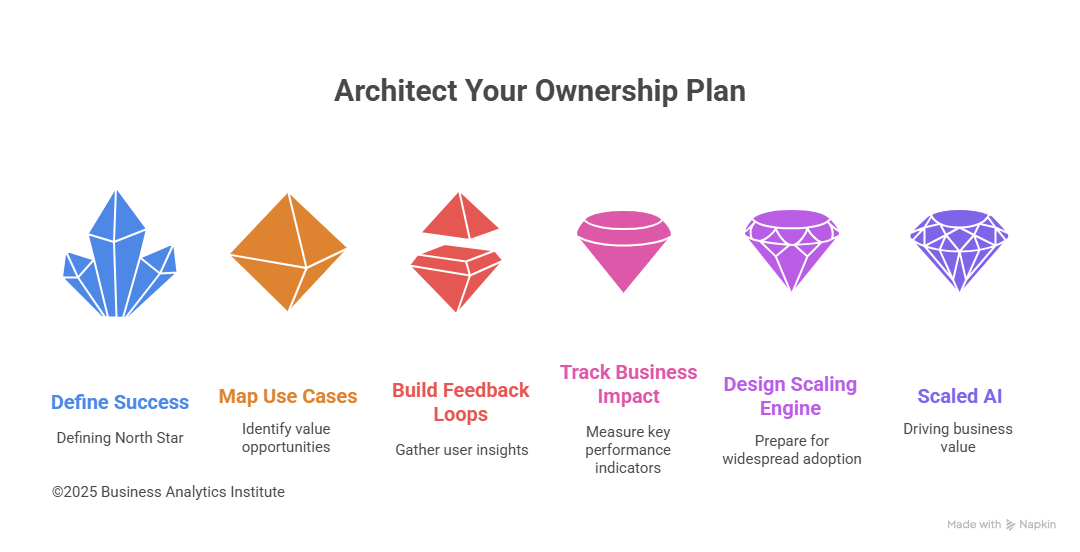

🚀 Your 90-Day Ownership Plan

Concepts are valuable. Execution is essential. Here's how to move from reading consultant frameworks to owning your transformation:

Month 1: Define your North Star

Identify 2-3 KPIs that define success this year: business imperatives such as customer retention, productivity, operational cost, and lifetime value. Not tech goals. Not consultant metrics. Your business KPIs. Map 5-6 high-potential use cases tied directly to those KPIs, and assess each for feasibility, data availability, and measurable value.

Month 2: Build accountability

Prioritize 1-2 high-impact use cases with strategic value and technical feasibility. Assign dual ownership (business leader + tech leader). No consultants. Your people. Your ownership. Set up dashboards tracking both AI performance and business outcomes. Establish bi-weekly leadership reviews. Your meetings. Your metrics. Your accountability.

Month 3: Measure what matters

Track technical metrics and business impact. Document what's working. Communicate progress transparently. Turn lessons into reusable templates. Create evaluation rubrics for future use cases.

🎯 The goal: You're not just running projects—you're building an internal engine for AI-powered innovation that consultants can't replicate.

📋 What Boards Actually Evaluate

Boards ask: "Can you systematically create value with AI without remaining dependent on consultants?" The scrutiny focuses on leadership capability, not technology adoption.

Five questions revealing strategic maturity:

Business value linkage: Is AI tied to measurable outcomes such as revenue growth, margin expansion, or churn reduction? Or is it sitting in innovation labs while consultants bill monthly retainers?

Portfolio management: Do they rapidly test use cases, learn quickly, kill what doesn't work, and scale what does? Or are they trapped in one consultant-designed pilot for nine months?

Internal ownership: Is AI owned exclusively by data science and consultants? Or are business leaders directly involved and accountable for outcomes?

Executive governance: How frequently does leadership review AI progress, risks, and results? Board-level visibility signals that AI is core to business performance, not delegated downward.

Institutional capability: Have they developed internal frameworks, evaluation scorecards, governance practices enabling repeatable success? Or do they need consultants every quarter?

⚡ The Transformation

The companies winning with AI aren't chasing complexity or hiring expensive consultants—they're starting simple, learning fast, and scaling with internal ownership.

They've moved from:

· AI-first → Business-first thinking

· Consultant-driven → Leadership-owned initiatives

· Precision scores → Business impact metrics

· Isolated pilots → Strategic portfolios

· External advisors → Internal cross-functional pods

This isn't about having the best data scientists (though that helps) or the most expensive consultants (that definitely doesn't). It's about having the best management system for converting AI capability into competitive advantage—an architecture you own.

✅ The Choice

Organizations achieving **80-90%** AI adoption aren't using fundamentally different technology. They're using fundamentally different leadership. They've built collaborative intelligence architecture, not by hiring consultants, but by personally owning the transformation.

The pattern: Organizations moving fastest are those where top leadership owns the transformation—they don't outsource it to consultants who disappear after the deck. When the CXO is in the loop, AI projects are treated as business bets requiring strategic judgment, not IT experiments managed by external advisors.

The companies dominating 2026 aren't those with the most AI pilots or biggest consulting budgets. They're the ones with the most disciplined, internally-owned AI transformation.

Where These Conversations Happen

The Business Analytics Institute's Executive Retreat on "Upscaling Talent in the Age of AI" (March 16-17, 2026, Biarritz, France) brings together C-suite leaders to develop their own comprehensive AI frameworks through peer learning and strategy workshops.

What makes this different: No consultant theater. No cookie-cutter frameworks. No dependency. Just CXOs understanding that the critical success factor is personal ownership of AI transformation.

The future belongs to CXOs who orchestrate collaborative intelligence. Not to those who wait for consultants to sell them "magical thinking."

About This Series

This is Part 1 of our five-part series preparing for the Biarritz Executive Retreat on "Upscaling Talent in the Age of AI."

Are you ready to move from AI ambition to AI execution—on your terms?